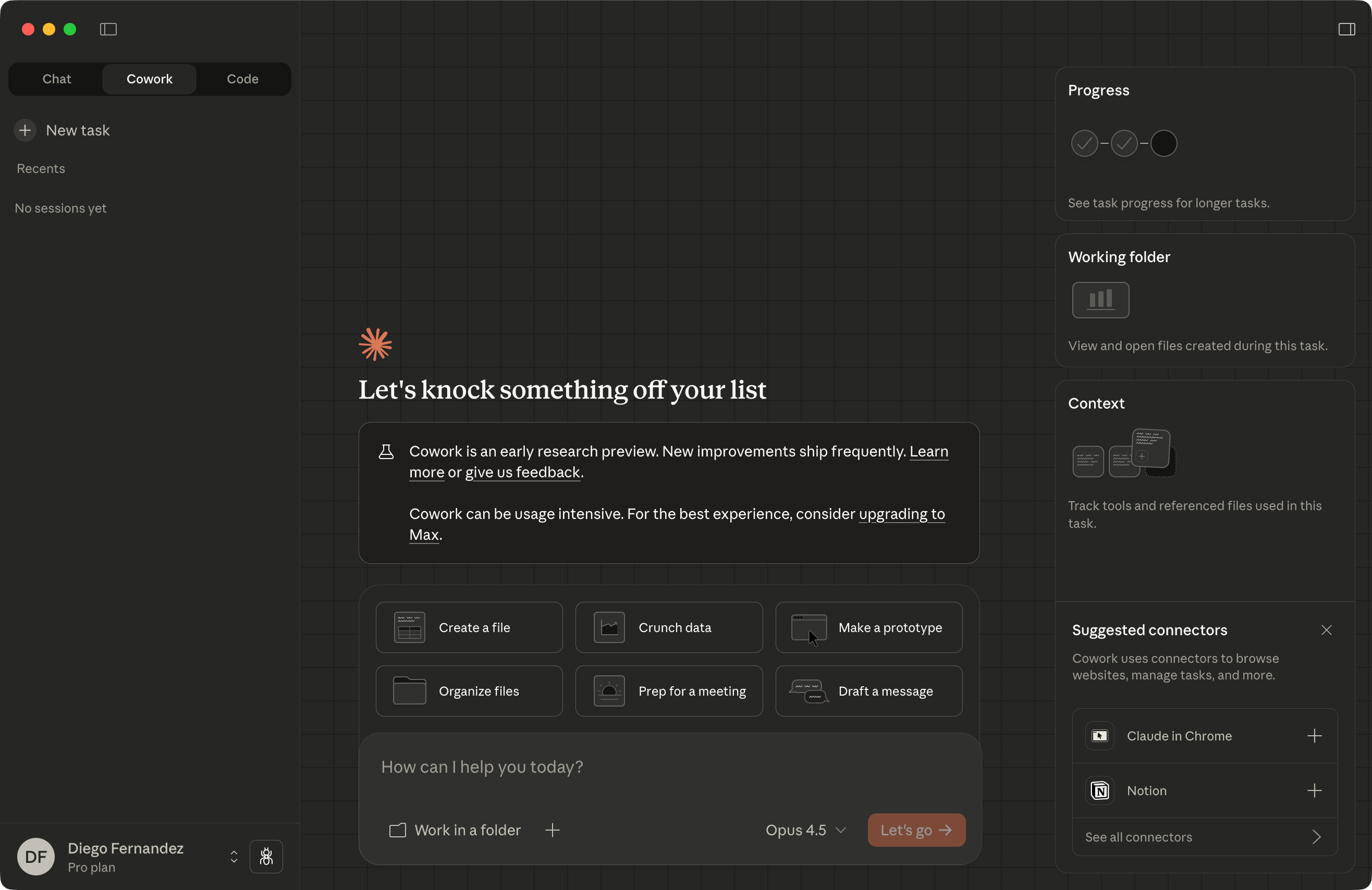

Claude Code has been a success among developers, and naturally, Anthropic wants to extend this success to Claude Cowork. But using AI agents for anything beyond coding hasn’t quite caught on yet. Even the OpenAI agent features in ChatGPT haven’t achieved massive adoption.

Why the disparity? The answer lies in the safety nets we’ve built for software development.

Claude Cowork extends the Claude Code workflow beyond coding, yet agent adoption outside development remains limited.

The Git Advantage

Version control tools, like Git, solve the fundamental problems of an “agentic world” by default:

- Audit Trails: Every change in the version control history has an author. In tools like Git, you can even record changes on behalf of other authors and include digital signatures to validate the authorship.

- Rollbacks: Each committed change can be undone.

- Parallel Exploration: It’s easy to explore different solutions by creating branches.

- Troubleshooting: From diffs to bisect, there are many ways to troubleshoot issues caused by changes.

- Common Interface: The command line and its text output provide a shared interface that is independent of the editor UI. Moreover, Git libraries and tools have special output formats that are easier to read in editors.

Compare this to what happens when you move outside of the software development world into tools like Microsoft 365, Figma, Google Docs, etc.:

- Limited audit trail: Most of these tools include versioning with authorship, but features like co-authorship or author verification are restricted.

- Limited undo: Many actions are not saved in the history. Manually reversing changes is also difficult, with user interfaces that are slow and limited (e.g., the Figma version history).

- Limited parallel exploration: It is either impossible or basic. Figma offers a branch feature, but it’s very limited and only available to certain tiers. Office suites from Microsoft or Google don’t support branching, and even comparing two file versions can be cumbersome (the MS Word document comparison feature hasn’t changed much since Word 2007).

- No common interface: Each system’s API is different.

The “Real World” Undo Problem

At least for document-oriented apps, you can always keep a copy and let the agent work on it in a sandbox, as Claude Cowork does. But what about other types of apps?

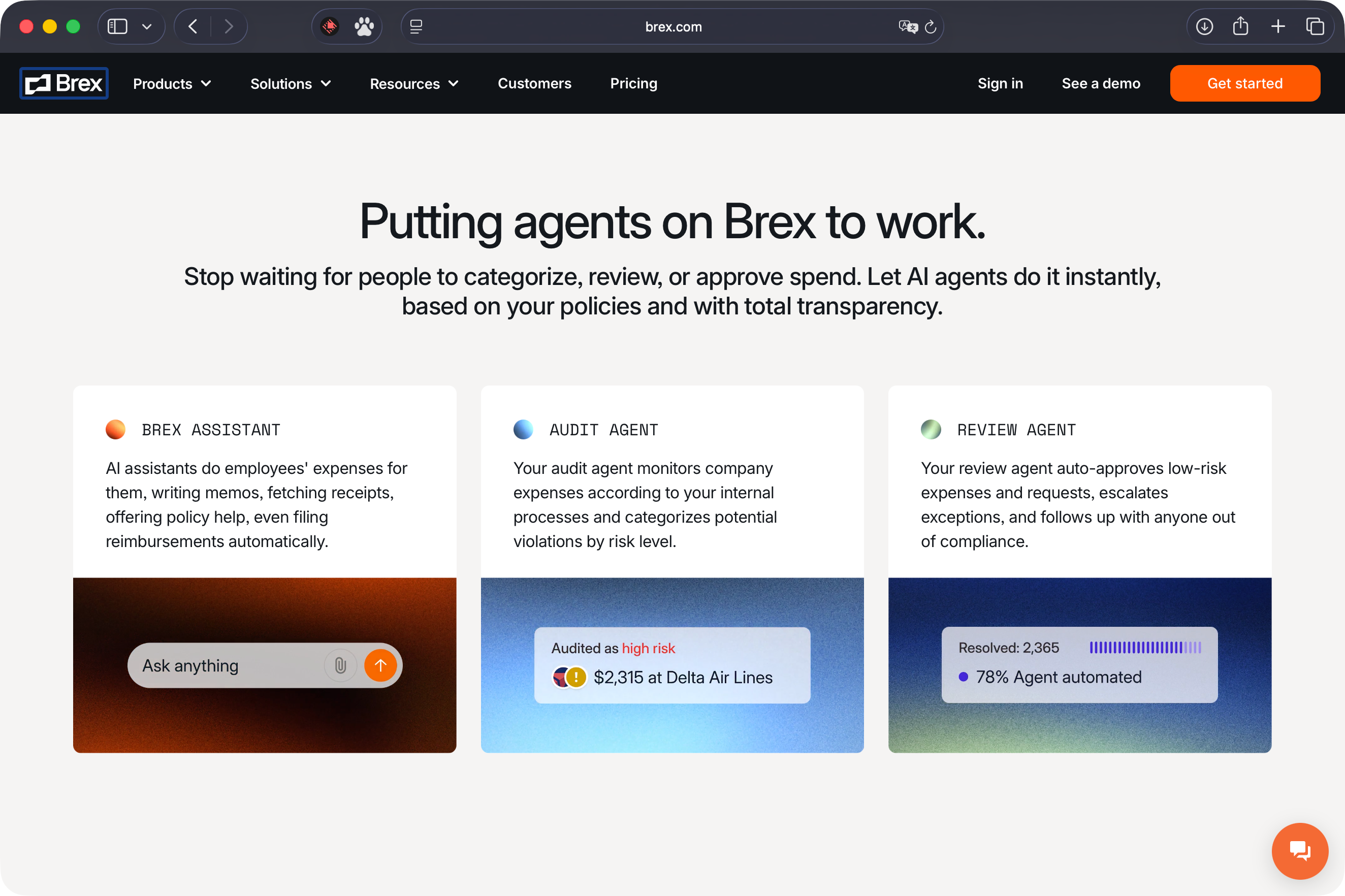

Imagine asking an agent to draft a plan to optimize your investment portfolio, only to find that the agent has started executing trades and selling off assets.

More and more financial products, such as Brex, are adding AI agent capabilities.

Currently, we rely on human-in-the-loop permission models: pop-ups asking, “Allow this tool to run?” However, decades of UI research show that humans are prone to slips. We often click “OK” to dismiss annoying dialog boxes without reading the details. It’s also hard to understand the effects of granting permissions. For example, when asked “Allow any calls to XYZ tool?”, you might click “Yes” because otherwise the agent can’t access your portfolio data, without realizing that the same permission could also allow modifications. It all depends on how the tool’s operations are designed and presented to the agent.
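To make the permission problem concrete, here is a minimal Python sketch of a tool that labels each operation as read or write, so a prompt can say exactly what a grant covers. Everything in it is an assumption made for illustration: the `Operation` and `Access` types, the operation names, and the prompt wording do not come from any real product.

```python
from dataclasses import dataclass
from enum import Enum


class Access(Enum):
    READ = "read"
    WRITE = "write"


@dataclass(frozen=True)
class Operation:
    """One operation exposed by a hypothetical portfolio tool."""
    name: str
    access: Access


# Hypothetical operations; a real tool would define its own.
PORTFOLIO_TOOL = [
    Operation("get_positions", Access.READ),
    Operation("get_balance", Access.READ),
    Operation("place_order", Access.WRITE),
]


def is_allowed(op: Operation, granted: set[Access]) -> bool:
    """An operation may run only if its access level was granted."""
    return op.access in granted


def permission_prompt(tool_name: str, operations: list[Operation],
                      granted: set[Access]) -> str:
    """Build a prompt that names what the grant allows and what stays blocked."""
    allowed = [op.name for op in operations if is_allowed(op, granted)]
    blocked = [op.name for op in operations if not is_allowed(op, granted)]
    return (
        f"Allow {tool_name} to run: {', '.join(allowed) or 'nothing'}? "
        f"Still blocked: {', '.join(blocked) or 'nothing'}."
    )


read_only = {Access.READ}
print(permission_prompt("portfolio", PORTFOLIO_TOOL, read_only))
# Allow portfolio to run: get_positions, get_balance? Still blocked: place_order.
```

With a split like this, saying “Yes” to reading portfolio data no longer implies permission to trade. The user can still misclick, but the prompt at least states the consequences.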

In an agentic world, we may need to implement measures to prevent automation-related disasters. For instance, a system can use authentication to distinguish actions taken by automation from those taken by humans, and incorporate delays or human-in-the-loop verification steps.
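One way such a safeguard might look is sketched below. It assumes each action already carries an authenticated actor type. The `ActionGate` class, the 300-second default, and the action names are hypothetical choices for illustration, not a description of any existing system. Destructive actions from an agent are held until a human approves them or a delay window passes, and a human can cancel them in the meantime.

```python
from dataclasses import dataclass, field
from enum import Enum


class Actor(Enum):
    HUMAN = "human"
    AGENT = "agent"


@dataclass
class Action:
    name: str
    actor: Actor          # assumed to come from an authenticated credential
    destructive: bool     # e.g. selling assets, deleting records
    submitted_at: float   # timestamp in seconds


@dataclass
class ActionGate:
    """Holds destructive agent actions until approved or until a delay passes."""
    delay_seconds: float = 300.0
    approved: set[str] = field(default_factory=set)
    cancelled: set[str] = field(default_factory=set)

    def decide(self, action: Action, now: float) -> str:
        # A cancellation by the human always wins.
        if action.name in self.cancelled:
            return "cancelled"
        # Humans and non-destructive actions pass straight through.
        if action.actor is Actor.HUMAN or not action.destructive:
            return "execute"
        # Explicit human approval skips the waiting period.
        if action.name in self.approved:
            return "execute"
        # Otherwise the action waits out the delay window.
        if now - action.submitted_at >= self.delay_seconds:
            return "execute"
        return "hold"


gate = ActionGate(delay_seconds=60)
sell = Action("sell_all_assets", Actor.AGENT, destructive=True, submitted_at=0.0)
print(gate.decide(sell, now=10.0))   # hold
gate.cancelled.add("sell_all_assets")
print(gate.decide(sell, now=120.0))  # cancelled
```

Whether a delay should end in automatic execution or in expiry is a design decision. For high-stakes actions, requiring explicit approval is probably the safer default.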

Introducing “Agentibility”

In software architecture, we use quality attributes (the well-known “-ilities”) to guide our decisions: Usability, Maintainability, Scalability, Reliability, etc. These attributes help us establish metrics and design better systems.

It is time to introduce a new one: Agentibility.

Agentibility is the ability of a system to be used by an AI agent in a way that is safe, effective, and trustworthy for the human user.

Just as Usability measures how well a human can interact with a system, Agentibility assesses how effectively an agent can interact with it on a human’s behalf.

The Pillars of Agentibility

- Agent-optimized APIs: Not all APIs are equal. A system with high Agentibility offers interfaces specifically designed for LLM consumption.

- Distinguish agents from humans: The system must differentiate between “User A” and “Agent acting on behalf of User A.” This distinction is crucial for security, auditing, usability, and rolling back bulk changes. For example, if an agent fills out a CRM entry, those fields might be highlighted or tagged so users can review and understand the modifications.

- Built-in Reversibility: This is the holy grail. For a system to have high Agentibility, changes made during an agent session need to be easily reversible.

- Feedback Loops: The system must give immediate, structured feedback to the agent (and by extension, the user). Did the action succeed? If not, why?
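Several of these pillars can be sketched together. The example below assumes a hypothetical in-memory CRM: `CrmStore`, `Change`, `Result`, and the actor string format are all invented for illustration. Every write is tagged with the actor that made it, recorded in a per-session journal, and answered with a structured result, so a whole agent session can be rolled back in one call.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Result:
    """Structured feedback returned to the agent after every action."""
    ok: bool
    reason: Optional[str] = None


@dataclass
class Change:
    record_id: str
    field_name: str
    old_value: Optional[str]  # None means the field did not exist before
    new_value: str
    actor: str                # e.g. "agent:on-behalf-of:alice"


@dataclass
class CrmStore:
    """Hypothetical in-memory CRM with a per-session change journal."""
    records: dict[str, dict[str, str]] = field(default_factory=dict)
    journal: dict[str, list[Change]] = field(default_factory=dict)

    def set_field(self, session_id: str, actor: str, record_id: str,
                  field_name: str, value: str) -> Result:
        record = self.records.get(record_id)
        if record is None:
            # Feedback loop: say why the action failed.
            return Result(ok=False, reason=f"record {record_id!r} not found")
        change = Change(record_id, field_name, record.get(field_name), value, actor)
        record[field_name] = value
        self.journal.setdefault(session_id, []).append(change)
        return Result(ok=True)

    def rollback(self, session_id: str) -> int:
        """Undo every change of one session, newest first. Returns the count."""
        changes = self.journal.pop(session_id, [])
        for change in reversed(changes):
            record = self.records[change.record_id]
            if change.old_value is None:
                record.pop(change.field_name, None)
            else:
                record[change.field_name] = change.old_value
        return len(changes)


store = CrmStore(records={"c1": {"name": "Acme"}})
actor = "agent:on-behalf-of:alice"
store.set_field("s1", actor, "c1", "name", "Acme Corp")
store.set_field("s1", actor, "c1", "phone", "555-0100")
missing = store.set_field("s1", actor, "c9", "name", "Unknown")
print(missing)               # Result(ok=False, reason="record 'c9' not found")
print(store.rollback("s1"))  # 2
print(store.records)         # {'c1': {'name': 'Acme'}}
```

The journal also supports the review case described above: because each `Change` stores its actor, a UI could highlight the fields an agent touched before the user decides whether to keep or roll back the session.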

Why It Matters

The adoption of agents at the OS level is inevitable. Microsoft, Apple, and Google are already deeply integrating AI into their ecosystems. But until our applications reach high levels of Agentibility, these integrations will remain inconsistent, risky, and unreliable. And even if OS-level integration takes time to become widespread, web applications are incorporating chat assistants everywhere, and the next logical step is for those assistants to evolve into tools that automate your daily tasks.

We have solved this for coding. Now, we need to solve it for everything else.